CISOs are increasingly turning to AI-enabled security technologies to augment their organizations’ cyber defense and extend the capabilities of their teams.

According to Foundry’s latest Security Priorities Study, 73% of security decision-makers are now more likely to consider a security solution that uses artificial intelligence, up from 59% the year prior.

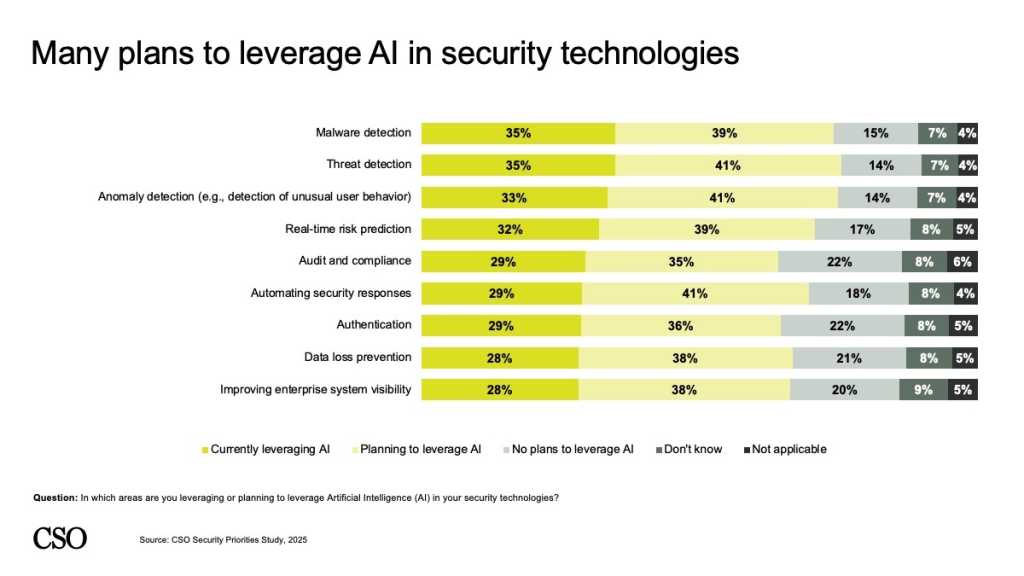

CISOs plan to leverage AI in a range of security functions, including malware detection, threat detection, anomaly detection, real-time risk prediction, and audit and compliance. They are also eyeing AI for automating security responses, performing authentication, ensuring data loss prevention, and improving enterprise system visibility.

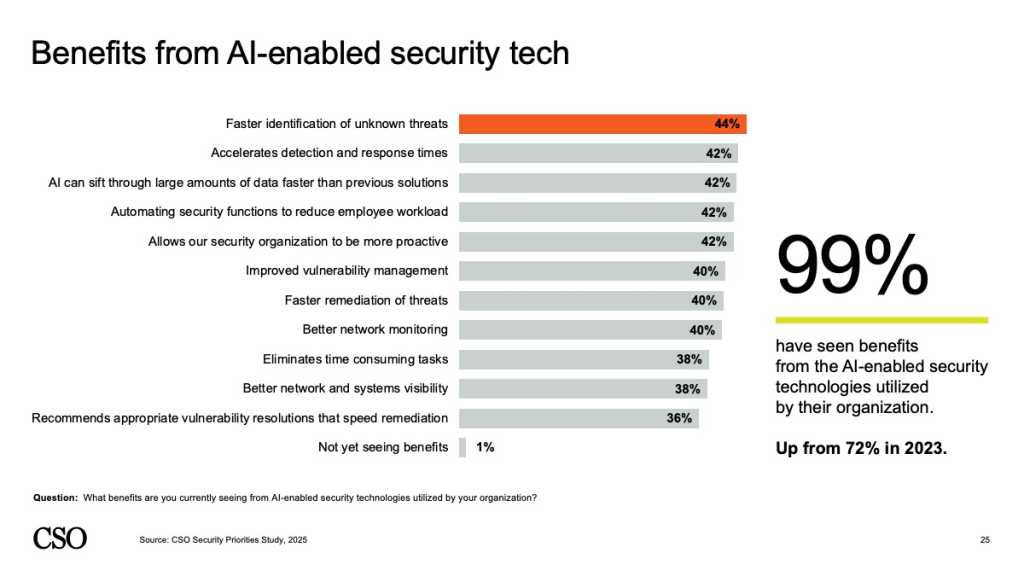

Survey respondents cited AI-enabled benefits such as faster detection of unknown threats, accelerated response times, and automation of security tasks to reduce employee workload. The findings are in line with an October 2025 study from management consultancy PwC, which found AI ahead of cloud security and data protection as the top cybersecurity investment priority for enterprises over the next 12 months.

But cutting through the hype to ensure AI investments have optimal impact remains an issue. Experts consulted by CSO say security leaders should prioritize AI investment over the next 12 to 18 months to boost anomaly detection, enhance identity and access management, and automate response. They should also be aware of potential pitfalls such as hallucinations, over-reliance on AI, and governance gaps when implementing AI in their security strategies.

Cutting through the noise

Oliver Newbury, chief strategy officer at cyber resilience vendor Halcyon and previously CISO at Barclays, tells CSO that the “strongest uses of AI in security are the ones that improve visibility and reduce noise.”

“Teams need clearer signals, earlier warnings, and quicker routes to certainty in the middle of an unfolding incident,” Newbury says. “AI that can sift large volumes of activity, highlight meaningful patterns, and present them in a way that analysts can act on immediately is where organizations see real benefit.”

Newbury adds: “These capabilities help shorten investigation time and support faster, confident decision-making during high-pressure situations.”

A human-led approach is no longer sufficient to deal with the increased complexity and volume of threats, many security experts contend. Strategic use of AI technology has the potential to recognize patterns of attack and offer analysts the ability to cut through the noise and make better decisions, freeing up time and resources to deal with higher-value security work and move beyond constant firefighting.

“Most security teams are overloaded, and not because they lack tools, but because they lack time and clear signals,” Newbury says. “AI earns its keep in the areas where speed and pattern recognition genuinely outperform manual effort: behavioral anomaly detection, early-stage threat indicators, and the subtle identity-related activity that often precedes a ransomware event.”
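To make the idea of behavioral anomaly detection concrete, here is a minimal sketch that compares each new observation against a trailing baseline and flags large deviations. It is not any vendor's method: the function name `flag_anomalies`, the window size, the threshold, and the sample login counts are all illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(counts, window=5, threshold=3.0):
    """Return indices whose value deviates sharply from the trailing baseline.

    Hypothetical helper: `window` and `threshold` are illustrative defaults,
    not recommended settings.
    """
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline: treat any change as a deviation.
            if counts[i] != mu:
                flagged.append(i)
            continue
        # Flag values more than `threshold` standard deviations from the mean.
        if abs(counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up hourly login counts for a single account.
hourly_logins = [4, 5, 6, 5, 4, 5, 48, 5, 6]
print(flag_anomalies(hourly_logins))  # [6]
```

A production system would need to handle seasonality and keep flagged spikes from contaminating later baselines, but the sketch shows why this kind of pattern recognition scales better than manual review.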

Check against delivery

David Tyler, founder of tech consultancy Outlier Technology, warns that some vendors slap the label ‘AI’ on existing capabilities and attach a higher price tag, while others deliver solid product development and real advances.

“A lot of what’s being sold as breakthrough AI is actually decades-old technology finally being implemented properly; this isn’t necessarily bad, as good product management matters as much as novel algorithms,” Tyler says. “But if a vendor’s ‘AI security solution’ appeared overnight in the last couple of years, you’re probably looking at rebranding rather than genuine capability building.”

CISOs should be asking how long the vendor has been investing in these capabilities and what their product evolution looks like, according to Tyler.

“Companies that have been building graph-based correlation, adaptive baselining, and behavioral analytics for a decade are very different from those who just added an AI chat function to their user interface and called it innovation,” Tyler says.

Dr. Andrew Bolster, senior manager of R&D at application security vendor Black Duck, also warns CISOs about vendors slapping neural networks onto existing tools without fixing underlying data quality issues.

“Your AI-powered authentication system is only as good as your identity data hygiene,” Dr. Bolster says. “Your AI-powered malware detection is only as good as your sample corpus quality and labelling accuracy.”

Dr. Bolster adds: “Before signing contracts for AI security platforms, audit your data governance maturity.”

Bolster also argues that CISOs themselves should focus more on how to build an AI-ready security data platform rather than which security tools they need to buy.

“CISOs should invest in data mesh architectures that treat security telemetry as a first-class data product with defined ownership, quality SLAs [service level agreements], and standardized schemas,” he says.
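As a rough illustration of what a quality SLA on security telemetry could look like in practice, the sketch below checks events against a small required-field schema and compares the conforming share with a target. The field names, the `REQUIRED_FIELDS` mapping, and the 99% target are assumptions made for the example, not anything Bolster or Black Duck specifies.

```python
# Hypothetical schema: required field name -> expected Python type.
REQUIRED_FIELDS = {
    "timestamp": str,
    "source": str,
    "user_id": str,
    "event_type": str,
}


def conforms(event):
    """True if the event carries every required field with the expected type."""
    return all(
        isinstance(event.get(name), kind)
        for name, kind in REQUIRED_FIELDS.items()
    )


def completeness(events):
    """Share of events that conform to the schema (0.0 for an empty batch)."""
    if not events:
        return 0.0
    return sum(conforms(e) for e in events) / len(events)


def meets_sla(events, minimum=0.99):
    """Compare the conforming share with an assumed SLA target."""
    return completeness(events) >= minimum


# Made-up batch: the third event is missing `user_id`.
sample = [
    {"timestamp": "2025-10-01T09:00:00Z", "source": "vpn", "user_id": "u1", "event_type": "login"},
    {"timestamp": "2025-10-01T09:01:00Z", "source": "vpn", "user_id": "u2", "event_type": "login"},
    {"timestamp": "2025-10-01T09:02:00Z", "source": "edr", "event_type": "alert"},
    {"timestamp": "2025-10-01T09:03:00Z", "source": "idp", "user_id": "u3", "event_type": "mfa"},
]
print(completeness(sample))  # 0.75
print(meets_sla(sample))     # False
```

Running a check like this per telemetry source gives the source's owner a concrete number to report against, which is the kind of accountability a data product model implies.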

Building an AI security platform

Merlin Gillespie, operations director at managed security services provider Cybanetix, sees the AI security market maturing and moving away from point solutions that offer reduced operational expenditure toward a more integrated approach. Gillespie warns that this shift creates fresh challenges for security leaders.

“These days every security tool has a layer of AI ‘assistance’ but instead of simplifying operations this is creating overlapping tools, inconsistent reporting, and unclear provenance of data,” according to Gillespie. “The learning is that AI-labelled tools are helpful but aren’t a solution in themselves.”

The exercise most organizations face is classifying which processes are eligible for automation, and which of these are enhanced by the determinism and reasoning of AI, Gillespie advises.

“Security teams should apply the same top-down analysis used across their wider business to support software and personnel efficiency, rather than being led by vendor tooling which can result in further siloing,” Gillespie says.

Potential pitfalls

Halcyon’s Newbury warns that these issues are far from the only potential pitfalls of deploying AI systems. For example, over-reliance on AI systems can lead to greater risk.

“AI shouldn’t replace the fundamentals such as asset management, patching, identity governance, proper segmentation, or tested recovery plans,” Newbury says. “Those disciplines matter even more as attackers adopt AI at speed.”

Issues inherited from the inadequate training of AI systems can also create problems.

“AI systems can easily inherit blind spots if they’re trained on narrow or unrealistic datasets,” he says. “CISOs must understand how models are trained and where their assumptions break down.”

Ransomware remains the clearest test of whether AI investment is being prioritized in the right places, Newbury argues.

“Most modern [ransomware] incidents are effectively identity-based attacks,” Newbury says. “Attackers are logging in rather than ‘hacking in,’ often armed with credentials harvested at scale by infostealers.”

Newbury adds: “As adversaries fold AI into their operations, you’ll see more of these attacks landing faster and hitting harder. That makes the resilience question far more urgent.”

Newbury concludes: “AI is worth the investment but only where it sharpens decision-making, reduces noise, and gives teams the time and clarity to act before a problem becomes a crisis.”